This is the 2nd blog post of a series. Please check out the previous one to understand Kubernetes APIs and their relations.

In this 2nd blog, we’ll see Kubernetes APIs and CSI Driver features in action using the MongoDB ReplicaSet setup. In addition, I’ll give some of the performance and high-availability tips. Firstly, let’s clone our demo repository.

|

git clone git@github.com:yusufcemalcelebi/running-db-on-eks.git |

Infrastructure Provisioning

We’ll use a terraform folder to create an EKS Cluster and related configurations.I used terraform-aws-modules/eks/aws module to create the EKS cluster and related components. Additionally, the IAM Role for Service Accounts(IRSA) provides AWS-level permissions to the pods. Check out this amazing blog post to learn more about IRSA.

The Terraform module creates an EKS cluster with 1.23.x version. It sets up some required components using the cluster-addons feature. Creating the EBS CSI driver will be so much easier this way. First, you must edit the locals.tf file and put your VPC-related information into it.

|

locals { |

Now our terraform configuration is ready, and we can apply it. I’m going to use the AWS Credentials to authenticate for the sake of this demo but you can use your own approach for example role-based access.

|

export AWS_SECRET_ACCESS_KEY="****************" # use your AWS Credentials |

It’ll take around 20 minutes. Retrieve the kubeconfig file using the following command:

|

aws eks --region eu-west-1 update-kubeconfig --name tf-demo |

Volume Provisioning Testing

Now, we have an EKS cluster with 3 worker nodes so, let’s check that the CSI Driver pods and dynamic-provisioning of volumes are working properly. The test case will include these steps:

- Creating the StorageClass to provide the dynamic-provisioning feature. This will use the EBS CSI Driver to allocate an EBS volume with gp3 type

- Creating a PersistentVolumeClaim to bind the PersistentVolume to Pod

- Creating a Pod and referring to the PVC and mounting the Volume to a specified path inside the container.

“gp3, a new type of SSD EBS volume that lets you provision performance independent of storage capacity, and offers a 20% lower price than existing gp2 volume types.” - AWS Blogs

Apply the YAML files inside the dynamic-provisioning-example folder.

|

kubectl apply -f ./dynamic-provisioning-example -n default |

- Check the PersistentVolume status and make sure it’s Bound.

- Go inside the pod and check the Volume directory content to be sure it’s writing successfully.

|

kubectl get persistentvolume |

I hope, you have reached this point with a proper setup. If you couldn't, it's okay. When I was setting up this demo, I faced a lot of issues. You should know where to check if you’re having problems with Volume Provisioning. I was sure the StorageClass was created and I checked the ebs-csi-controller logs. It showed up but, there was a problem with the Pod permissions.

|

ebs-csi-controller-8585db468-qq69b csi-provisioner caused by: AccessDenied: Not authorized to perform sts:AssumeRoleWithWebIdentity ebs-csi-controller-8585db468-qq69b ebs-plugin caused by: AccessDenied: Not authorized to perform sts:AssumeRoleWithWebIdentity |

The service account name was wrong on the IAM Role Trusted Entities. It worked finally when I fixed it.

You can set ebs-gp3-sc StorageClass as a default one. When the configuration YAML doesn’t specify the StorageClass, it’ll automatically use this default. On EKS, gp2 is the default SC so, let’s remove it and set our custom SC as a default one.

|

kubectl patch storageclass gp2 -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}' kubectl patch storageclass ebs-gp3-sc -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}' |

Deploying MongoDB Cluster

MongoDB supports two different architectures: Standalone and Replicaset. Replicaset provides high availability for production DB setup. So I'll use MongoDB Replicaset architecture to demonstrate the features. Let’s deploy it using bitnami/mongodb Helm chart. We need a secret resource to set the root password and replica set key.

|

kubectl apply -f ./mongodb/mongodb-custom-secret.yaml # create the secret |

MongoDB will have 3 replicas in total. Kubernetes creates replicas in order, starting with the lowest ordinal. One of them will be primary and the others will be secondary nodes. I’m using mongosh CLI to connect to the MongoDB replicas. You can use the following commands to access the replicas:

|

kubectl port-forward pod/mongodb-0 27017:27017 |

As a default, Primary gets the read and write requests. If you want to use Secondary Replicas for the read requests, you should configure it on the secondary node using the following command.

|

rs0 [direct: secondary] test> db.getMongo().setReadPref("secondaryPreferred") |

You can insert data to test whether the replicas are in sync or not. First, insert data on the primary.

|

rs0 [direct: primary] test> db.myTest.insertOne({isSync: true}) |

Now check the secondary node and make sure the inserted data exists on it.

|

rs0 [direct: secondary] test> db.myTest.find() |

Operations on MongoDB Instance

The database management requires some basic operations. On a cloud-provided solution, these features will be one click away, but you must set them up manually on Kubernetes.

Of course, having database automation, release management, or CI/CD pipeline for your databases would be better instead of manual operations. We are a partner of DBmaestro, and with DBmaestro, you can achieve you can bring DevOps practices for Databases.

Snapshot

Firstly, we must create the CRDs and controllers for the snapshot operation. I added YAML files to the demo repository but you can follow official documentation if you prefer.

|

kubectl kustomize database-operations/external-snapshotter/crd | kubectl create -f - |

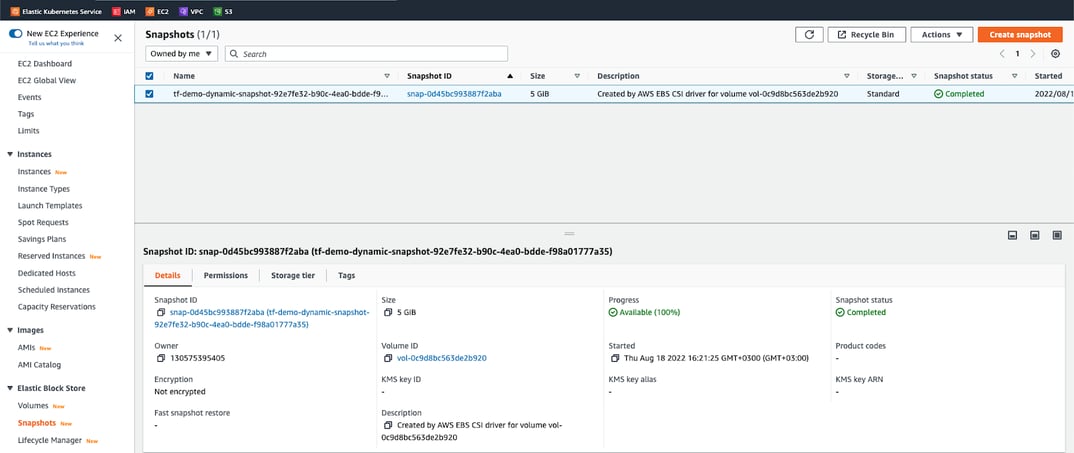

Now we can use the VolumeSnapshot resource to take snapshots of the volumes. You can also use the AWS Console to check the EBS snapshots.

|

kubectl apply -f database-operations/snapshot-class.yaml |

You can set up a CronJob to take snapshots periodically and you can delete VolumeSnapshot resources older than your retention time.

Resizing

As a best practice, take a snapshot of the volumes before starting. You can only increase volume size not decrease it. After modifying a volume, you must wait at least six hours and ensure that the volume is in the in-use or available state before you can modify the same volume. If you check the StorageClass resource, you’ll see it has a field to allow volume expansion. You should have that to apply the resizing operation. Now, let’s edit the PVC resources and set the new size. You should change the .spec.resources.requests.storage field. I’ll do this operation for all PVC resources.

|

kubectl edit -n default pvc datadir-mongodb-0 kubectl edit -n default pvc datadir-mongodb-1 kubectl edit -n default pvc datadir-mongodb-2 |

You can connect to one of the pods and run the df -h command to make sure the volume is expanded on the OS level.

Encryption at Rest

As a security best practice, you can store your data encrypted at rest.

Encryption at rest is like storing your data in a vault. Encryption in transit is like putting it in an armored vehicle for transport.

You must add the encrypted: "true" parameter to the StorageClass to enable encryption at rest. Additionally, if you don’t specify the kmsKeyId parameter AWS will use the default KMS key for the region the volume is in.

Encryption In Transit

There is no one-click-away solution to enable TLS for MongoDB connections. If you set the tls.enabled value to true on the Helm chart, it’ll generate a custom CA and self-signed certificates using an init container named generate-tls-certs. If you use a self-signed certificate, although the communications channel will be encrypted to prevent eavesdropping on the connection, there will be no validation of the server identity. So it’s not the recommended way in the Production environment.

Another alternative might be deploying the cert-manager and managing certificates on the Kubernetes cluster. You can pass the certificate-related parameters to the Helm chart.

“cert-manager adds certificates and certificate issuers as resource types in Kubernetes clusters, and simplifies the process of obtaining, renewing and using those certificates.” cert-manager.io

Service mesh solutions might be another alternative to enable secure communication in the Kubernetes cluster. Istio provides that feature out of the box.

Monitoring

If you set the metrics.enabled value to true, it’ll enable the metrics using a sidecar Prometheus exporter on each pod. Additionally, you can configure the ServiceMonitor resource using the MongoDB Helm chart values to scrape metrics. Check out the available metrics with the following commands.

|

kubectl port-forward pod/mongodb-0 9216:9216 |

You can have a look at some Prometheus alert examples from this amazing page. https://awesome-prometheus-alerts.grep.to/rules.html#mongodb

High-Availability

You should run each Mongodb pod on a different worker node to increase availability. If you want to enforce this, set the “podAntiAffinityPreset” to “hard” on the Helm chart. Make sure you have enough worker nodes or cluster-autoscaler configured properly. Another option for High-Availability is running each pod on a different Availability Zone. You can use the node affinity feature to distribute pods across AZs.

“Failures can occur that affect the availability of instances that are in the same location. If you host all of your instances in a single location that is affected by a failure, none of your instances would be available.” docs.aws.amazon.com

Lastly, I strongly recommend using a PodDisruptionBudget resource to prevent managed disruptions for your databases. Set the PDB with maxUnavailable=0. The Helm Chart has required values for that.

Persistent Volume Topology Awareness

One important point while working with multiple AZs is that EBS volumes must be attached to instances that run on the same AZ with the volume. Therefore, there is a field on the PV to enforce this AZ constraint for the PersistentVolume and the Pod. It’s automatically handled, so you don’t need to add it.

|

Node Affinity: |

Let’s say a worker node that runs one of the MongoDB pods crashes. Because of the specified Node Affinity on the PV, the scheduler will search for an available worker node on the eu-west-1 zone to run the MongoDB pod. If you don’t have any available spots, the pod will be stuck in pending status. You might expect that the cluster-autoscaler will provision another node on the eu-west-1 but it won’t if it has not yet reached the upper scaling limit in all zones. This limitation can be solved if you use separate node groups per zone. This way CA knows exactly which node group will create nodes in the required zone rather than relying on the cloud provider choosing a zone for a new node in a multi-zone node group.

Karpenter is another alternative to solve this problem out of the box. If you use Karpenter instead of cluster-autoscaler, it applies Volume Topology Aware Scheduling so you don’t need to worry about the AZ constraints of your EBS volumes.

Conclusion

In this blog post, I have tried to show you some best practices while managing a database on the Kubernetes cluster. It’s not an easy task but I have learned a lot making it happen. Please share if you think it’ll be useful for others and let me know if you have any questions.